
For the last twenty years, computer game developers have been on a perpetual quest for ever greater realism in the graphics of their games. These days, any real-time 3D game built around human characters that does not include moving mouths, fully articulated hands, and physics-based cloth and hair will be laughed off the shelves of the local software store. But does that heightened realism necessarily lead to a better gameplay experience for the player? In some cases, yes. Characters with moving mouths look much better than those without when they're delivering lines of dialog, and almost all players will remark on this. But only some players will notice that each finger of a character's hands is individually modeled; others would not notice or care if the characters had simplified "mittens" for hands instead. Procedural hair, meanwhile, may produce effects so subtle that most players couldn't tell the difference if asked.

Computer games are just reaching the point visually where developers are starting to ask whether they need more polygons and more complex animation techniques, or whether they simply need to exploit the assets they already have more creatively. More and more games are moving away from the hyper-realistic look games have pursued for years, and some titles have opted for an openly cartoony look instead. One need only look at the games that use cel-shading renderers to see how this can work to a title's benefit: Jet Grind Radio for the Sega Dreamcast, the forthcoming Herdy Gerdy for the Sony PlayStation 2, and Cel Damage for the Microsoft Xbox are all great-looking games where realism is not even attempted. The recent Batman: Vengeance faithfully reproduced the stylish look of the popular animated Batman series, allowing gamers to explore a game world with the same visual quality as the show they watch on TV.
Recently, many Western game fans and developers were shocked to learn that Nintendo's new Zelda game would use an extremely cartoony, cel-animation style, prompting concerns within the industry that revered Zelda designer Shigeru Miyamoto might finally have lost his golden touch. More likely, Miyamoto realizes that a higher-polygon, hyper-realistic Zelda might impress the techies, while an extremely stylized yet beautiful cartoon look can become the darling of a much larger audience. What is it about cartoon-style graphics that can be so compelling to the general public? They certainly look far less "real" than photographs or live-action films, yet well-made cartoons can evoke as much emotional response from an audience as their photographic equivalents. Perhaps cartoonists know something about drawing the human form in a reductive, exaggerated way that can move people as highly realistic drawings cannot. Veteran game designer and interactive storytelling specialist Chris Crawford tackles that very issue in this month's column. - Richard Rouse III

Artists Against Anatomists

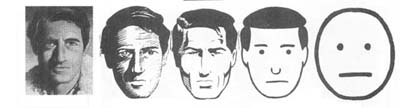

The human nose is significantly cooler than body temperature; for this reason, it appears darker in infrared images of the face. Should not graphics displays of human faces, therefore, draw the nose a bit darker than the rest of the face? The suggestion is absurd on its face because, as we all know, the human eye cannot perceive infrared radiation, and including visual information that the eye cannot perceive is a waste of time. The point of my silly suggestion is to highlight the difference between optical reality and perceptual reality. The human eye is not a video camera; it does not perceive the world as it really is. Through millions of years of evolution it has been finely tuned to home in on those components of the visual field that are significant to the individual's survival, and to ignore all else. If my genes don't care about it, I simply don't see it.

This little lesson has value for designers of graphics algorithms, for it seems to me that the greatest shortcoming of computer graphics, at least as used in entertainment products, is a cold, mechanical air. The images are excellent representations of optical reality, but they just don't cut the mustard in terms of perceptual reality.

A simple example of the power of perceptual reality can be found in a phenomenon I call "visual vibrato". In music, vibrato is the deliberate oscillation of a performed note around its specified frequency. A violinist can produce the effect by rapidly quivering a finger on the string as the note is played. The effect is pleasing because it triggers discriminators in the human auditory system that are keyed to changes in frequency rather than to frequency itself, thereby heightening the intensity of the auditory experience. Much the same thing happens in the human visual system. It's a difficult phenomenon to notice, because its direct effects are subtracted out of the perceived visual experience by higher-level processing.
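To make the musical analogy concrete, a vibrato tone can be modeled as a sine wave whose instantaneous frequency oscillates around the nominal pitch f0: f(t) = f0 + d·sin(2πrt), where d is the depth in Hz and r is the vibrato rate in oscillations per second. The minimal sketch below computes the phase by integrating that frequency in closed form. The function name `vibrato_tone` is hypothetical, and the 440 Hz pitch, 6 Hz depth, and 5.5 Hz rate in the usage line are illustrative values, not measurements from the article.

```python
import math


def vibrato_tone(f0, depth, rate, duration, sample_rate=8000):
    """Sine tone whose pitch oscillates around f0 by +/- depth Hz, rate times a second.

    Returns (samples, inst_freqs), with one entry per audio sample in each list.
    """
    n = int(duration * sample_rate)
    samples, inst_freqs = [], []
    for i in range(n):
        t = i / sample_rate
        # Instantaneous frequency wobbles around the nominal pitch f0.
        inst_freqs.append(f0 + depth * math.sin(2 * math.pi * rate * t))
        # Phase = integral of 2*pi*f(tau) from 0 to t, evaluated in closed form.
        phase = 2 * math.pi * f0 * t + (depth / rate) * (1 - math.cos(2 * math.pi * rate * t))
        samples.append(math.sin(phase))
    return samples, inst_freqs


# Illustrative values only: A440 with a 6 Hz-deep, 5.5 Hz vibrato for one second.
samples, inst_freqs = vibrato_tone(440.0, 6.0, 5.5, 1.0)
```

The pitch never settles on 440 Hz. It sweeps continuously between about 434 and 446 Hz, and this continual change is what the frequency-change discriminators described above respond to.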
The human eye is never at rest; it is constantly darting about, sampling different sectors of the visual field. Any given object is sampled dozens of times per second, with each sampling yielding slightly different results. If we could somehow translate that retinal-level experience into a movie, the object under inspection would have a shimmering quality. Higher up in the visual processing system that shimmering is filtered out, but much of the resolution and depth we perceive in an object is derived from this "visual vibrato".

I first encountered this effect twenty years ago while working on a primitive graphical display on the Atari 800 home computer. I wanted to show the face of a character, but my digitized source image had more resolution and pixel depth than my display. Rather than settling for a conventional bit blit, I tried a dynamic scheme in which each output pixel's value was determined by a semi-randomly weighted sum of the corresponding pixels in the source image. In effect, I used time depth as a substitute for pixel depth. I had serendipitously triggered the human visual system's "visual vibrato", and the result struck viewers powerfully: the image had much greater subjective power than any objective calculation would have awarded it.

A particularly revealing example of the difference between optical reality and perceptual reality is the depiction of the human face. I am flabbergasted by the brilliant wrong-headedness of the graphics researchers who have captured in algorithmic form the detailed anatomy of the human face, including all the tiny muscles, the effects of skin tone, even pore structure. It is truly impressive work, but it strikes me as rather like a bridge built with titanium rivets and pig-iron girders. The human visual system boasts a great deal of neural circuitry for facial recognition. It's hardwired in; infants a few days old can recognize faces.
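The article does not describe the Atari 800 routine in detail. The following is only a minimal sketch of the general idea, temporal dithering, under several assumptions. Intensities are floats in [0, 1]. Each output pixel covers a square block of source pixels. The "semi-random" weights are equal weights jittered by up to 50%. The weighted sum is stochastically rounded to one of the display's few levels. The stochastic rounding step is added here and is not taken from the text. Without it, a block of uniform intensity would quantize to the same level on every frame, and no time depth would be gained. The function names are hypothetical.

```python
import random


def temporal_dither_frame(src, block, levels, rng):
    """Render one low-depth, low-resolution frame from a richer source image.

    src    -- 2D list of source intensities in [0.0, 1.0]
    block  -- side of the square block of source pixels behind each output pixel
    levels -- number of intensity levels the display can show (at least 2)
    rng    -- random.Random instance, so a run can be reproduced
    """
    frame = []
    for oy in range(len(src) // block):
        row = []
        for ox in range(len(src[0]) // block):
            # The source pixels that map onto this output pixel.
            samples = [src[oy * block + dy][ox * block + dx]
                       for dy in range(block) for dx in range(block)]
            # Assumed "semi-random" weights: equal weights jittered by up to 50%.
            weights = [1.0 + rng.uniform(-0.5, 0.5) for _ in samples]
            value = sum(w * s for w, s in zip(weights, samples)) / sum(weights)
            # Assumed stochastic rounding: step up one level with probability equal
            # to the fractional part, so the time average converges to `value`.
            scaled = value * (levels - 1)
            level = int(scaled)
            if rng.random() < scaled - level:
                level += 1
            row.append(min(level, levels - 1))
        frame.append(row)
    return frame


def average_frames(frames, levels):
    """Per-pixel time average rescaled to [0, 1], a crude stand-in for what the eye integrates."""
    n = len(frames)
    return [[sum(f[y][x] for f in frames) / (n * (levels - 1))
             for x in range(len(frames[0][0]))]
            for y in range(len(frames[0]))]


# Hypothetical 4x4 source shown on a 2x2 display that has only 4 intensity levels.
source = [
    [0.1, 0.2, 0.6, 0.7],
    [0.3, 0.4, 0.8, 0.9],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]
rng = random.Random(1)
frames = [temporal_dither_frame(source, 2, 4, rng) for _ in range(2000)]
perceived = average_frames(frames, 4)  # each entry approaches its 2x2 block mean
```

No single frame can show an intensity of 0.25 on this four-level display. Averaged over many frames, however, the top-left output pixel settles near 0.25, the mean of its source block. This is one way to trade time depth for pixel depth.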
The brain's face-recognition circuitry ruthlessly discards some visual information and amplifies certain facial features. Eyebrows, eyes, and mouth are heavily processed, while noses, ears, cheeks, and chins get short shrift. Ideally, the graphics algorithms we use should mirror these internal perceptual algorithms. Some readers might agree with the ideal but object that it is unrealizable, given that we have no data on those internal perceptual algorithms. Surely such data will become available as neurophysiological research progresses, they might say, at which time we can incorporate the results into our algorithms.

This argument overlooks a major source of data: the art of facial enhancement. We have thousands of years' worth of expertise in decorating and enhancing the human face. Women don't use liner pencils to trace the outlines of their noses; they trace their eyes. The three primary sites for cosmetics are the eye region, the eyebrows, and the lips, because those are the features our internal facial-recognition algorithms are keyed on.

An even more useful source of information about those algorithms is the artistic representation of the human face. Artists mastered the photorealistic representation of the human face hundreds of years ago. Ever since, they have expanded their expressive frontiers by experimenting with new and different ways of depicting the human face. They freely violate anatomical principles if doing so enhances the expressive power of their art. For example, the hands and feet of Michelangelo's statue of David are abnormally large. This is not because Michelangelo was an ignorant klutz, but because the statue was more beautiful, and expressed deeper truths, with overlarge hands and feet. All good portraitists learn a variety of tricks for subtly altering the optical reality of a face to obtain greater expressive value.
The eyes of most characters in computer games are too small. Enhancing the eyes is the first task of the cosmetician and the first resort of the painter, yet computer games still show boringly realistic faces. It's time for the computer graphics field to include eye enhancement in its standard display algorithms.

So where are we to learn the tricks of the portraitist's trade? It might be worthwhile to consult professional artists directly, but I fear that their craft is so specialized that a computer scientist attempting a conversation would throw up his or her hands in frustration. Better to start at a simple level. Much good work in this direction has already been done. In the early 1980s, Dr. Susan Brennan published work on algorithmic morphing of facial features. Her work opened up a wide variety of possibilities, but I fear that most attention was devoted to morphing from one face to another. A more important pursuit, morphing a face from one expression to another, received less attention in the entertainment software field.

I believe that the outside field most likely to yield useful results for entertainment software is comics. Comics artists are past masters at representing the human face cleanly and well. Every computer graphics researcher working in face generation should be familiar with this illustration from Scott McCloud's wondrous book, Understanding Comics.

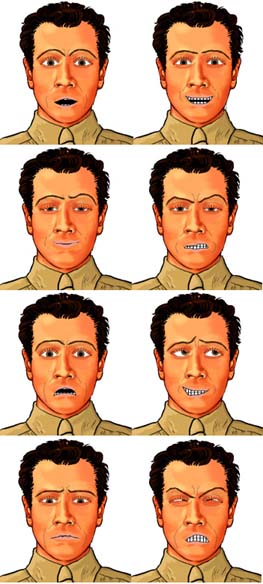
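The eye-enlargement and expression-morphing ideas discussed above can be illustrated on a face stored as named 2D landmark points. The sketch below is hypothetical throughout. The landmark keys (`left_eye`, `right_eye`, `mouth`), the 1.25 scale factor, and plain linear interpolation are illustrative choices. They are not Brennan's published method, and they are not drawn from any standard face model.

```python
def enlarge_about_centroid(points, scale):
    """Scale a list of (x, y) points away from their own centroid."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    return [(cx + scale * (x - cx), cy + scale * (y - cy)) for x, y in points]


def enlarge_eyes(face, scale=1.25):
    """Return a copy of `face` with both (hypothetical) eye outlines enlarged in place."""
    out = dict(face)
    for key in ("left_eye", "right_eye"):
        out[key] = enlarge_about_centroid(face[key], scale)
    return out


def morph_expression(neutral, target, t):
    """Linearly blend two faces that share keys and point counts; t=0 is neutral, t=1 is target."""
    return {
        key: [(x0 + t * (x1 - x0), y0 + t * (y1 - y0))
              for (x0, y0), (x1, y1) in zip(neutral[key], target[key])]
        for key in neutral
    }


# Hypothetical landmark sets (y grows upward); only the mouth corners differ.
neutral = {
    "left_eye": [(-3.0, 2.0), (-1.0, 2.0), (-2.0, 2.5), (-2.0, 1.5)],
    "right_eye": [(1.0, 2.0), (3.0, 2.0), (2.0, 2.5), (2.0, 1.5)],
    "mouth": [(-2.0, -2.0), (0.0, -2.0), (2.0, -2.0)],
}
smile = dict(neutral, mouth=[(-2.0, -1.4), (0.0, -2.0), (2.0, -1.4)])

half_smile = morph_expression(neutral, smile, 0.5)
stylized = enlarge_eyes(half_smile)
```

Scaling about each eye's centroid enlarges the eye without moving it. Blending with t between 0 and 1 slides every landmark along a straight line from one expression to the other, which is the simplest possible way to animate between expressions. A renderer would then draw the face from the adjusted landmarks.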

Ten years ago I dabbled with these problems as part of my work on interactive storytelling. I believed that human facial expressions are the most important dramatic feedback that a player can receive. I was able to come up with some interesting results. I'm sure that others have done much better, but perhaps by working on overly powerful machines they have underestimated what can be done on a home computer. Here are my results as of five years ago, when I stopped working on this line of development.

The overall conclusion should be clear: we should relax our obsession with optical reality and concentrate more attention on perceptual reality, at least in images concerned in any way with human emotions. If we want to communicate emotionally meaningful images, we need to listen more to the artists and less to the anatomists.

---

Chris Crawford has designed and programmed more than a dozen published computer games. He has written several books on game design and a great many articles in a wide variety of periodicals, and has lectured on computer game design all over the world. He founded and ran the Computer Game Developers' Conference. He is currently working on interactive storytelling, Leonid meteor research, and an analysis of the adages of Desiderius Erasmus of Rotterdam. He may be reached at chriscrawford@wave.net. Some of his writings are available in his library at www.erasmatazz.com.
